New AI algorithms streamline data processing for space-based instruments

The International Space Station, where Steve Chien and his team prototyped a new set of AI algorithms that will reduce data latency and improve dynamic targeting capabilities for satellites. (Credit: NASA / ISS)

08/22/2022 – Earth-observing instruments can gather a world’s worth of information each day. But transforming that raw data into actionable knowledge is a challenging task, especially when instruments have to decide for themselves which data points are most important.

“There are volcanic eruptions, wildfires, flooding, harmful algal blooms, dramatic snowfalls, and if we could automatically react to them, we could observe them better and help make the world safer for humans,” said Steve Chien, a JPL Fellow and Head of Artificial Intelligence at NASA’s Jet Propulsion Laboratory.

Engineers and researchers from JPL and the companies Qualcomm and Ubotica are developing a set of AI algorithms that could help future space missions process raw data more efficiently. These algorithms allow instruments to identify, process, and downlink prioritized information automatically, shortening the time it takes for information about events like a volcanic eruption to travel from space-based and planetary instruments to scientists on the ground.

These AI algorithms could help space-based remote sensors decide independently which Earth phenomena, such as wildfires, are most important to observe.

“It’s very difficult to direct a spacecraft when we’re not in contact with it, which is the vast majority of the time. We want these instruments to respond to interesting features automatically,” said Chien.
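One small piece of an automatic response is fitting a follow-up observation into the spacecraft's existing plan after something interesting is detected. The sketch below assumes a plan made of non-overlapping time windows and places the follow-up in the earliest gap that fits before a planning horizon. The `Observation` type, the `schedule_follow_up` function, and the example targets are hypothetical illustrations, not JPL's response-scheduling software; a real onboard scheduler must also weigh constraints such as power, pointing, and data volume.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Observation:
    """A hypothetical entry on a spacecraft's observation timeline."""
    target: str
    start_s: int     # start time, seconds from the plan epoch
    duration_s: int  # length of the observation window, seconds

    @property
    def end_s(self) -> int:
        return self.start_s + self.duration_s


def schedule_follow_up(plan: List[Observation], target: str, earliest_s: int,
                       duration_s: int, horizon_s: int) -> Optional[Observation]:
    """Insert the earliest non-overlapping follow-up that ends by horizon_s.

    Appends the new observation to plan and returns it, or returns None
    (leaving plan unchanged) if no gap is long enough.
    """
    candidate = earliest_s
    for obs in sorted(plan, key=lambda o: o.start_s):
        if obs.end_s <= candidate:
            continue  # this window finishes before the candidate start
        if candidate + duration_s <= obs.start_s:
            break  # the gap before this window is long enough
        candidate = obs.end_s  # overlap: try starting right after this window
    if candidate + duration_s > horizon_s:
        return None
    new_obs = Observation(target, candidate, duration_s)
    plan.append(new_obs)
    return new_obs


if __name__ == "__main__":
    plan = [Observation("routine_A", 0, 100), Observation("routine_B", 150, 100)]
    follow_up = schedule_follow_up(plan, "volcano_followup", earliest_s=50,
                                   duration_s=60, horizon_s=1000)
    print(follow_up)
    # Observation(target='volcano_followup', start_s=250, duration_s=60)
```

Here the 50 s gap between the two routine windows is too short for a 60 s follow-up, so the sketch schedules it immediately after the second window.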

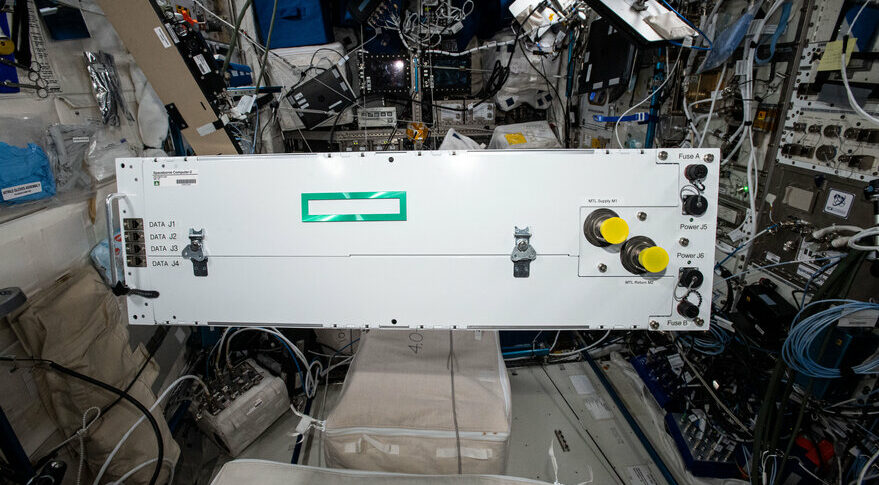

Chien prototyped the algorithms using commercially available advanced computers onboard the International Space Station (ISS). During several separate experiments, Chien and his team investigated how well the algorithms ran on Hewlett Packard Enterprise’s Spaceborne Computer-2 (SBC-2), a traditional rack server computer, as well as on embedded computers.

These embedded computers include the Snapdragon 855 processor, previously used in cell phones and cars, and the Myriad X processor, which has been used in terrestrial drones and low Earth orbit satellites.

Together with ground tests on PPC-750 and Sabertooth processors, both traditional spacecraft processors, these experiments validated more than 50 image processing, image analysis, and response scheduling AI software modules.

The experiments show that these embedded commercial processors are well suited to space-based remote sensing. This will make it much easier for other scientists and engineers to integrate the processors and AI algorithms into new missions.

The full results of the experiments were published in a series of three papers at the 2022 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), which can be accessed through the links below.

The Spaceborne Computer-2 aboard the ISS, which allowed Chien to verify not only that his algorithms could run effectively on compact commercial processors but also that those processors were fit for space-based applications. (Credit: NASA)

Chien explains that while it is easiest to deploy AI algorithms from ground computers to larger, rack-mounted servers like the SBC-2, satellites and rovers have less space and power, which means they would need to use smaller, low-power, embedded processors similar to the Snapdragon or Myriad units.

By processing the data onboard, these AI algorithms prevent important or urgent information from being buried within larger data transmissions. A researcher wouldn’t have to downlink and process an entire transmission to see that a hurricane is intensifying or a harmful algal bloom has formed.
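The benefit of onboard processing largely comes down to ordering: small, urgent products can go to the ground ahead of bulk data. A minimal sketch of that idea, assuming a fixed downlink budget per ground-station pass and an integer priority assigned by onboard analysis, is a greedy selection by priority. The `DataProduct` type, the `plan_downlink` function, and the example sizes are hypothetical and are not taken from the JPL papers.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DataProduct:
    """A hypothetical onboard product awaiting downlink."""
    name: str
    size_mb: float   # size of the product in megabytes
    priority: int    # higher number = more urgent (assumed convention)


def plan_downlink(queue: List[DataProduct], budget_mb: float) -> List[DataProduct]:
    """Greedily select products for one pass, most urgent first.

    Products are sorted by descending priority (ties broken by smaller size),
    and each is selected only if it still fits in the remaining budget.
    """
    selected = []
    remaining = budget_mb
    for product in sorted(queue, key=lambda p: (-p.priority, p.size_mb)):
        if product.size_mb <= remaining:
            selected.append(product)
            remaining -= product.size_mb
    return selected


if __name__ == "__main__":
    queue = [
        DataProduct("full_scene_raw", size_mb=4000.0, priority=1),
        DataProduct("wildfire_alert", size_mb=0.01, priority=10),
        DataProduct("fire_thumbnail", size_mb=2.5, priority=8),
    ]
    plan = plan_downlink(queue, budget_mb=500.0)
    print([p.name for p in plan])  # ['wildfire_alert', 'fire_thumbnail']
```

With a 500 MB budget, the tiny alert and the thumbnail go out on this pass while the multi-gigabyte raw scene waits for a later one. A greedy rule is not optimal for packing, but it is simple and always sends the most urgent product first when it fits.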

“A large image could have gigabytes of data, so it might take a day to get it to the ground and process it. But you don’t need to process all that data to identify a wildfire. These algorithms pre-process data onboard so that researchers get the most important information first,” said Chien.
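Chien's point that a wildfire can be flagged without sending the whole image can be illustrated with a deliberately simple detector: scan a grid of brightness temperatures and, if any pixel exceeds a threshold, emit only a few numbers (hot-pixel count, bounding box, and peak value). The 360 K cutoff, the `detect_hotspots` name, and the summary format are assumptions for this sketch. Operational fire-detection algorithms use multi-band, contextual tests, and this is not the algorithm described in the JPL papers.

```python
from typing import List, Optional, Tuple

# Hypothetical threshold for this sketch only; operational fire products use
# multi-band, contextual tests rather than a single fixed cutoff.
FIRE_THRESHOLD_K = 360.0


def detect_hotspots(scene: List[List[float]],
                    threshold_k: float = FIRE_THRESHOLD_K) -> Optional[dict]:
    """Scan a 2-D grid of brightness temperatures (kelvin) for hot pixels.

    Returns a compact summary (pixel count, bounding box, peak value) instead
    of the full scene, or None if no pixel exceeds the threshold.
    """
    hits: List[Tuple[int, int]] = [
        (row, col)
        for row, line in enumerate(scene)
        for col, value in enumerate(line)
        if value > threshold_k
    ]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return {
        "hot_pixels": len(hits),
        "bbox": (min(rows), min(cols), max(rows), max(cols)),  # (r0, c0, r1, c1)
        "peak_k": max(scene[r][c] for r, c in hits),
    }


if __name__ == "__main__":
    scene = [[300.0] * 6 for _ in range(4)]  # a small, uniformly cool scene
    scene[1][2] = 410.0
    scene[2][3] = 375.0
    print(detect_hotspots(scene))
    # {'hot_pixels': 2, 'bbox': (1, 2, 2, 3), 'peak_k': 410.0}
```

The summary is a few dozen bytes regardless of scene size, which is what lets an alert reach the ground long before the full image does.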

These algorithms could be useful not only for Earth-observing instruments but also for instruments observing other planets and moons. The proposed Europa Lander mission, for example, could use Chien’s algorithms to help search for life on the Jovian moon.

“There are several missions that are in concept development right now that could use this technology. They’re still in the early phases of development, but these are missions that need the kind of onboard analysis, understanding, and response these algorithms enable,” said Chien.

The team is also testing neural network models to interpret Mars satellite imagery. “Someday such a neural net could enable a satellite to detect a new impact ejecta, evidence of a meteorite impact, and alert other spacecraft or take follow-up images,” said JPL Data Scientist Emily Dunkel. “Rovers could also use these processors with neural networks to determine where it is safe for the rover to drive,” Dr. Dunkel added.

“We used the CogniSat framework to deploy models to the Myriad X, reducing the effort to develop deep learning models for onboard use. This experience helps prove that this advanced hardware and software system is ready now for space missions,” said Léonie Buckley, Senior Engineer at Ubotica.

As climate change continues to alter the world we live in, information systems like Chien’s allow scientific instruments to be as dynamic as the Earth systems they observe.

“We don’t often think about the fact that we’re walking around with more computing power in our cell phones than supercomputers had forty years ago. It’s an amazing world we live in, and we’re trying to incorporate those advancements into NASA missions,” said Chien.

NASA’s Earth Science Technology Office funded this project in collaboration with the NASA Earth Science Division (ESD) Research & Analysis and Flight Programs.

Publications:

https://ai.jpl.nasa.gov/public/documents/papers/IGARSS2022-Onboard-Not-DL-IGARSS2022-Camera.pdf

https://ai.jpl.nasa.gov/public/documents/papers/IGARSS-2022-DL-Movidius-camera.pdf

https://ai.jpl.nasa.gov/public/documents/papers/IGARSS_2022_Candela_DT.pdf

Gage Taylor, NASA Earth Science Technology Office